Building a generative AI application isn't just about models anymore. It's about getting the right information to those models when they need it, without retraining everything from scratch. That's where Retrieval-Augmented Generation (RAG) comes in. RAG tools let large language models pull in accurate, up-to-date, or company-specific information at query time. If you're just starting out, choosing the right tool can feel like sorting through noise. So let's cut the fluff and focus on what actually works. Here are the best RAG tools to get you going with minimal friction.

LangChain gives structure to what could otherwise feel like chaos. Think of it as the scaffolding for your generative AI app, the thing that holds everything together. It connects your language model to external data sources such as PDFs, websites, or private documents, and lets you link the processing steps into customizable chains. It supports both vector store integrations and custom tool use, making it a favorite for developers who want control without building from scratch.

Previously known as GPT Index, LlamaIndex is best when you're dealing with messy or unstructured data. It helps transform your existing data (emails, docs, Notion pages, etc.) into a usable knowledge base. You don't need to reinvent your workflow, either. Its node-based approach makes it easy to track where the information came from, which helps with debugging and improving answers later.
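The provenance idea can be sketched in plain Python. This is not LlamaIndex's API: the `Node` class, `to_nodes`, `cite`, and the fixed word-count splitting are hypothetical stand-ins, chosen only to show how each chunk can carry a pointer back to its source.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """A chunk of text plus the metadata needed to trace it back to its source (hypothetical)."""
    text: str
    source: str    # where the chunk came from, e.g. a file name or page title
    position: int  # index of the chunk within its source document
    metadata: dict = field(default_factory=dict)


def to_nodes(documents: dict[str, str], words_per_node: int = 50) -> list[Node]:
    """Split each document into fixed-size word windows, recording each window's origin."""
    nodes = []
    for source, text in documents.items():
        words = text.split()
        for position, start in enumerate(range(0, len(words), words_per_node)):
            chunk = " ".join(words[start:start + words_per_node])
            nodes.append(Node(text=chunk, source=source, position=position))
    return nodes


def cite(node: Node) -> str:
    """Build a provenance label that can be shown next to a generated answer."""
    return f"{node.source}#chunk{node.position}"


docs = {"faq.md": "Refunds take five business days", "notes.txt": "Ship within two days"}
for node in to_nodes(docs, words_per_node=3):
    print(cite(node), "->", node.text)
```

Because every chunk keeps its `source` and `position`, an answer built from retrieved nodes can list exactly which documents it drew on, which is what makes debugging a bad answer tractable.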

If you want something open-source but battle-tested, Haystack checks the box. It works especially well when you're building search-based applications with RAG. One major draw is how it lets you mix and match components — retrievers, generators, databases — all with clean documentation. It’s flexible enough for researchers but polished enough for production-level deployment.
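The mix-and-match idea boils down to components that share a small interface. The sketch below is a hypothetical plain-Python illustration, not Haystack's component API: `Retriever`, `KeywordRetriever`, and `SimplePipeline` are made-up names, and the generator is any function that takes the query and the retrieved context.

```python
from typing import Callable, Protocol


class Retriever(Protocol):
    """Any object with a matching retrieve() method can be plugged into the pipeline."""

    def retrieve(self, query: str, top_k: int) -> list[str]: ...


class KeywordRetriever:
    """Ranks documents by how many query words they contain; a deliberately simple baseline."""

    def __init__(self, documents: list[str]):
        self.documents = documents

    def retrieve(self, query: str, top_k: int) -> list[str]:
        terms = set(query.lower().split())
        scored = [(len(terms & set(doc.lower().split())), doc) for doc in self.documents]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        # Drop documents that share no words with the query.
        return [doc for score, doc in scored[:top_k] if score > 0]


class SimplePipeline:
    """Connects a retriever to a generator; either side can be swapped without touching the other."""

    def __init__(self, retriever: Retriever, generator: Callable[[str, list[str]], str]):
        self.retriever = retriever
        self.generator = generator

    def run(self, query: str, top_k: int = 2) -> str:
        context = self.retriever.retrieve(query, top_k)
        return self.generator(query, context)


docs = ["Haystack builds search pipelines", "Pinecone is a vector database", "Chroma runs locally"]
pipeline = SimplePipeline(KeywordRetriever(docs), generator=lambda q, ctx: " | ".join(ctx))
print(pipeline.run("vector database", top_k=1))
```

Swapping in a vector-based retriever or a real LLM call only requires an object with the same `retrieve` method or a function with the same signature; the pipeline class itself does not change.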

Pinecone isn't a RAG framework, but it's a popular vector database that plays a key role in making RAG work. It stores and indexes the embeddings your application searches to find relevant context for the LLM. What sets Pinecone apart is its speed and scalability: it runs as a managed service, so if you're worried about latency or your dataset is growing fast, it can scale with you without you operating the infrastructure yourself.
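At its core, the job described here is nearest-neighbour search over stored embeddings. The sketch below does exact, brute-force cosine search in memory. It borrows the common upsert/query vocabulary, but it is not Pinecone's client, and `InMemoryVectorIndex` is a hypothetical name. Managed vector databases typically rely on approximate indexes instead, precisely so that queries stay fast as the collection grows.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity: 1.0 means same direction, 0.0 means orthogonal."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.hypot(*a) * math.hypot(*b)
    return dot / norm if norm else 0.0


class InMemoryVectorIndex:
    """Exact (brute-force) nearest-neighbour search over stored embeddings (hypothetical class)."""

    def __init__(self) -> None:
        self.ids: list[str] = []
        self.vectors: list[list[float]] = []

    def upsert(self, item_id: str, vector: list[float]) -> None:
        """Insert a new vector, or overwrite the existing one with the same id."""
        if item_id in self.ids:
            self.vectors[self.ids.index(item_id)] = vector
        else:
            self.ids.append(item_id)
            self.vectors.append(vector)

    def query(self, vector: list[float], top_k: int = 3) -> list[tuple[str, float]]:
        """Score every stored vector against the query and return the top_k (id, score) pairs."""
        scored = [(item_id, cosine(vector, v)) for item_id, v in zip(self.ids, self.vectors)]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:top_k]


index = InMemoryVectorIndex()
index.upsert("a", [1.0, 0.0])
index.upsert("b", [0.0, 1.0])
index.upsert("c", [1.0, 1.0])
print(index.query([1.0, 0.1], top_k=2))
```

This scan costs time proportional to the number of stored vectors on every query, which is fine for a prototype and exactly the bottleneck a dedicated vector database is built to avoid.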

Weaviate does more than store vectors: it brings context and meaning into search. It offers built-in modules for classification, question answering, and even knowledge graph capabilities. That means you don't just retrieve similar chunks of text; you can start understanding relationships between concepts. It's a smart choice if you're planning to build apps that go beyond surface-level responses.

For developers who want something lightweight and local, Chroma is a vector store that doesn’t try to be too much. It’s good for quick prototypes, small-scale projects, or when you want something to run on your own machine. The API is simple and clean, so you’re not stuck figuring out dozens of parameters. It also integrates smoothly with LangChain, making it a solid combo for basic RAG apps.

Milvus is designed for scale. If your project involves large datasets (think millions of documents) and you need a system that can manage them without hiccups, Milvus delivers. It searches large vector spaces efficiently, offers several index types, and can work with multiple types of embeddings. It's used in industries where performance can't lag, like finance and healthcare.
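One common way vector databases keep search fast at this scale is an inverted-file (IVF) index, which Milvus offers among its index types (for example `IVF_FLAT`). The toy version below only illustrates the concept and is not Milvus code: the `IVFIndex` class is hypothetical, and the centroids are passed in by hand rather than learned with k-means as a real system would do.

```python
import math


class IVFIndex:
    """Toy inverted-file (IVF) index. Each vector is stored in the bucket of its nearest
    centroid; a search scans only the buckets of the n_probe centroids closest to the query."""

    def __init__(self, centroids: list[list[float]]):
        # In a real system the centroids come from clustering (e.g. k-means) a sample of the data.
        self.centroids = centroids
        self.buckets: list[list[tuple[str, list[float]]]] = [[] for _ in centroids]

    def _closest_centroids(self, vector: list[float], n: int) -> list[int]:
        order = sorted(range(len(self.centroids)),
                       key=lambda c: math.dist(vector, self.centroids[c]))
        return order[:n]

    def add(self, item_id: str, vector: list[float]) -> None:
        self.buckets[self._closest_centroids(vector, 1)[0]].append((item_id, vector))

    def search(self, vector: list[float], top_k: int = 3, n_probe: int = 1) -> list[str]:
        candidates = []
        for c in self._closest_centroids(vector, n_probe):
            candidates.extend(self.buckets[c])  # buckets that are not probed are never scanned
        candidates.sort(key=lambda item: math.dist(vector, item[1]))
        return [item_id for item_id, _ in candidates[:top_k]]


index = IVFIndex(centroids=[[0.0, 0.0], [10.0, 10.0]])
for item_id, vec in [("p1", [1.0, 0.0]), ("p2", [0.0, 1.0]),
                     ("q1", [9.0, 10.0]), ("q2", [10.0, 9.0])]:
    index.add(item_id, vec)
print(index.search([9.5, 9.5], top_k=4, n_probe=1))  # scans one bucket
print(index.search([9.5, 9.5], top_k=4, n_probe=2))  # scans both buckets
```

With `n_probe=1` the first search scans only the bucket near the query and returns two results even though four were requested; raising `n_probe` scans more buckets, trading speed for recall.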

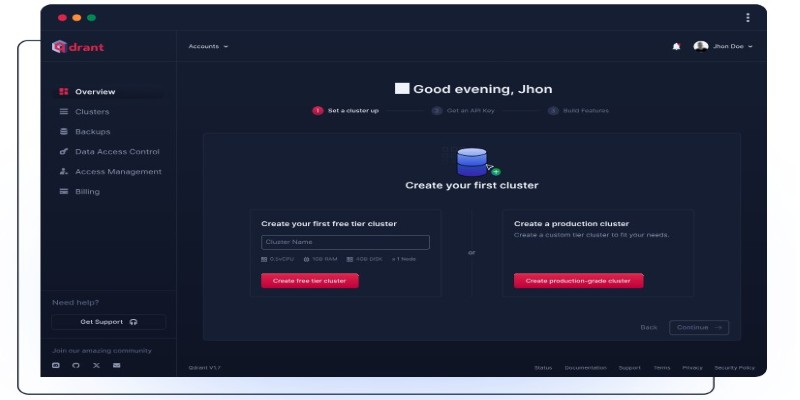

Qdrant is a high-performance vector database built for real-time applications. It focuses on delivering fast and accurate results, even with large datasets. One of its strengths is built-in filtering — you can narrow down search results using metadata like tags or categories, which helps keep your responses relevant. It supports popular embedding models and works well with frameworks like LangChain and Haystack, making it easy to slot into your existing pipeline.
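Metadata filtering can be sketched as "restrict, then rank". The `payload` and `must` names below echo Qdrant's terminology, but the code is a hypothetical plain-Python illustration rather than the Qdrant client; a production engine typically applies the filter inside its index instead of scanning a list.

```python
import math
from dataclasses import dataclass


@dataclass
class Point:
    """A stored vector plus a metadata payload (hypothetical structure)."""
    id: str
    vector: list[float]
    payload: dict


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.hypot(*a) * math.hypot(*b)
    return dot / norm if norm else 0.0


def filtered_search(points: list[Point], query: list[float],
                    must: dict, top_k: int = 3) -> list[str]:
    """Keep points whose payload matches every key/value pair in `must`, then rank by similarity."""
    allowed = [p for p in points if all(p.payload.get(k) == v for k, v in must.items())]
    allowed.sort(key=lambda p: cosine(query, p.vector), reverse=True)
    return [p.id for p in allowed[:top_k]]


points = [
    Point("a", [1.0, 0.0], {"category": "billing"}),
    Point("b", [0.9, 0.1], {"category": "shipping"}),
    Point("c", [0.0, 1.0], {"category": "billing"}),
]
print(filtered_search(points, [1.0, 0.0], must={"category": "billing"}))
```

Point `b` is nearly identical to the query vector but never appears, because its category does not match the filter; that is how filtering keeps semantically close but irrelevant content out of the prompt.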

Vespa is a more advanced option for those who want full control over both retrieval and ranking. It combines vector search with traditional keyword search and lets you define complex logic to decide what results show up first. It's especially useful for applications where precision matters — like legal tech or enterprise search. While it takes more effort to set up, Vespa shines when you need performance at scale with detailed control over results.
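A simple way to picture hybrid ranking is a weighted blend of a vector score and a keyword score. In Vespa, this kind of logic lives in rank profiles defined in the application schema; the Python below is only a hypothetical illustration of a linear blend, not Vespa syntax, and its keyword score is a crude term-overlap stand-in for a lexical scorer such as BM25.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.hypot(*a) * math.hypot(*b)
    return dot / norm if norm else 0.0


def keyword_score(query: str, text: str) -> float:
    """Fraction of query terms present in the text (crude stand-in for BM25)."""
    terms = set(query.lower().split())
    words = set(text.lower().split())
    return len(terms & words) / len(terms) if terms else 0.0


def hybrid_rank(query: str, query_vec: list[float],
                docs: list[tuple[str, list[float]]], alpha: float = 0.5) -> list[str]:
    """Rank docs by alpha * vector similarity + (1 - alpha) * keyword score, best first."""
    scored = [
        (alpha * cosine(query_vec, vec) + (1 - alpha) * keyword_score(query, text), text)
        for text, vec in docs
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [text for _, text in scored]


docs = [("contract termination clause", [0.2, 0.8]), ("employee handbook", [1.0, 0.0])]
for alpha in (1.0, 0.5, 0.0):
    print(alpha, hybrid_rank("termination clause", [1.0, 0.0], docs, alpha))
```

With `alpha=1.0` the ranking is purely semantic and with `alpha=0.0` purely lexical; values in between let exact term matches (such as a clause name in a legal document) lift a result that embeddings alone would rank lower.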

Out of all the tools, LangChain is a solid place to start. It connects your data, models, and logic into one manageable setup — without requiring you to reinvent everything. Here's how to get started.

Begin by choosing the data your app will use, such as customer support documents or internal notes. Use LangChain's document loaders to bring this content in and split it into smaller chunks. Then generate embeddings for those chunks with an embedding model, for example one from OpenAI or Hugging Face, and store them in a vector database like Chroma.
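The load-and-embed step can be sketched without any framework. This is not LangChain code: `toy_embed` is a hypothetical hashed bag-of-words function standing in for a real embedding model, `load_and_embed` is a hypothetical helper, and each file is embedded whole for brevity, where a real setup would chunk first.

```python
import math
import zlib
from pathlib import Path


def toy_embed(text: str, dims: int = 64) -> list[float]:
    """Hashed bag-of-words vector, L2-normalised. Placeholder for a real embedding model."""
    vec = [0.0] * dims
    for word in text.lower().split():
        # zlib.crc32 is stable across runs, unlike Python's randomised built-in hash().
        vec[zlib.crc32(word.encode("utf-8")) % dims] += 1.0
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm else vec


def load_and_embed(folder: str, pattern: str = "*.txt") -> list[dict]:
    """Read every matching file and return records ready to insert into a vector store."""
    records = []
    for path in sorted(Path(folder).glob(pattern)):
        text = path.read_text(encoding="utf-8")
        records.append({"id": path.name, "text": text, "embedding": toy_embed(text)})
    return records
```

Keeping the original text next to each embedding matters: the vector is only used to find a match, while the text is what eventually goes into the prompt.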

Once your data is in place, set up a retriever that pulls the most relevant content based on a user query. This connects to a chain that sends both the question and the retrieved context into your language model. LangChain handles the flow, so you're not manually passing inputs and outputs around.
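The retrieve-then-generate flow that a chain automates can be written out by hand. In this hypothetical sketch, `retriever` and `llm` are any callables you supply (for example a vector-store lookup and a model API call); `PROMPT_TEMPLATE`, `build_prompt`, and `run_chain` are illustrative names, not LangChain's API.

```python
from typing import Callable

PROMPT_TEMPLATE = (
    "Answer the question using only the context below.\n\n"
    "Context:\n{context}\n\n"
    "Question: {question}\n"
    "Answer:"
)


def build_prompt(question: str, context: list[str]) -> str:
    """Insert the retrieved chunks and the user's question into the prompt template."""
    bullets = "\n".join(f"- {chunk}" for chunk in context)
    return PROMPT_TEMPLATE.format(context=bullets, question=question)


def run_chain(question: str,
              retriever: Callable[[str], list[str]],
              llm: Callable[[str], str]) -> tuple[str, list[str]]:
    """Retrieve context, build the prompt, call the model, and return answer plus context."""
    context = retriever(question)
    answer = llm(build_prompt(question, context))
    return answer, context


# Stub components so the flow can be run without any external service.
answer, used = run_chain(
    "How long do refunds take?",
    retriever=lambda q: ["Refunds are processed within five business days."],
    llm=lambda prompt: "Five business days." if "five business days" in prompt else "Unknown.",
)
print(answer, used)
```

Returning the retrieved context alongside the answer makes it easy to check which chunks the model actually saw.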

Next comes tuning. Adjust how your content is chunked, refine prompts, and test responses. Inspecting which chunks were retrieved for each question shows you where the setup needs adjusting. If needed, you can expand your app with tools like live database access or API calls, but the basic structure stays simple: load, embed, retrieve, generate. LangChain just makes it easier to keep everything working smoothly.
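Chunk size and overlap are the first knobs most people turn. The hypothetical `chunk_text` helper below splits on words; LangChain's own splitters, such as `RecursiveCharacterTextSplitter`, expose similar `chunk_size` and `chunk_overlap` settings but measure length in characters by default.

```python
def chunk_text(text: str, chunk_size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into windows of `chunk_size` words; consecutive windows share `overlap` words."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be non-negative and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reaches the end of the text
    return chunks


sample = " ".join(str(i) for i in range(10))
print(chunk_text(sample, chunk_size=4, overlap=2))
```

Larger chunks give the model more surrounding context per hit, while overlap reduces the chance that an answer is split across a chunk boundary; re-run your test questions after each change and compare what gets retrieved.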

Starting with RAG doesn't have to feel overwhelming. These tools were built to take the heavy lifting off your plate so you can focus on what matters — building something useful. Whether you go with LangChain, LlamaIndex, or another option depends on what you’re trying to achieve and how much flexibility you want. Begin small, keep things modular, and choose tools that grow with your idea. Once you get the basics right, the rest becomes a matter of tuning, not starting over.
