Machine learning can feel overwhelming if you don’t have the right tools lined up. From cleaning your data to building the model and tuning it for performance, every step has software that makes the job easier, faster, or just plain doable. But with so many tools available, how do you know which ones are truly helpful? Let’s break it down and take a look at the ones that are actually worth your time.

Scikit-learn is a go-to for classical machine learning. Whether you're running logistic regression or support vector machines, it keeps things straightforward, because every estimator shares the same fit and predict interface. It's a Python library built on NumPy and SciPy, and it works smoothly with pandas DataFrames. If you're working on a classification, clustering, or regression task, this one gets the job done without making you feel like you need a PhD just to write a function.
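Here's a minimal sketch of that shared interface, assuming scikit-learn is installed. It uses the Iris dataset bundled with the library and fits a logistic regression and an SVM through the same fit and score calls. The model choices and split settings are illustrative, not recommendations.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Small built-in dataset, so nothing needs to be downloaded
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)

# Both models scale features first, then classify; the API is identical
models = {
    "logistic_regression": make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000)),
    "svm": make_pipeline(StandardScaler(), SVC(kernel="rbf")),
}

scores = {}
for name, model in models.items():
    model.fit(X_train, y_train)                 # train
    scores[name] = model.score(X_test, y_test)  # mean accuracy on held-out data
    print(f"{name}: {scores[name]:.3f}")
```

Swapping in another estimator, say a random forest, only changes the line that constructs it.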

TensorFlow is the kind of tool you reach for when your project steps into deep learning territory. It’s backed by Google, and its power shows in how well it handles everything from image recognition to natural language tasks. It’s not just about building models, either: it offers plenty of options for deployment and optimization, such as TensorFlow Serving for production servers, TensorFlow Lite for mobile and edge devices, and TensorFlow.js for the browser. If you're dealing with large datasets or complex networks, TensorFlow gives you the control you need.
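To make the deployment side a little more concrete, here's a small sketch, assuming TensorFlow 2.x. It wraps a toy computation (the `Scaler` class is hypothetical) in a `tf.function` with a fixed input signature, exports it in the SavedModel format, and reloads it the way a serving environment would.

```python
import tempfile

import tensorflow as tf


class Scaler(tf.Module):
    """Hypothetical toy module: multiplies its input by a trainable weight."""

    def __init__(self):
        super().__init__()
        self.w = tf.Variable(2.0)

    # A fixed input signature lets TensorFlow trace and export a concrete graph
    @tf.function(input_signature=[tf.TensorSpec(shape=[None], dtype=tf.float32)])
    def __call__(self, x):
        return self.w * x


module = Scaler()
export_dir = tempfile.mkdtemp()
tf.saved_model.save(module, export_dir)  # writes graph + variables to disk

# Reload without needing the original Python class definition
restored = tf.saved_model.load(export_dir)
result = restored(tf.constant([1.0, 2.0])).numpy()
print(result)
```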

While TensorFlow can feel a bit low-level for some, Keras, which ships with TensorFlow as tf.keras, sits on top of it and acts as a friendly guide. The syntax is clean, and building neural networks feels a lot more approachable. It’s especially helpful for experimenting with model architecture because it doesn’t drown you in configuration. For those just stepping into deep learning, Keras is like an entry door that doesn’t slam shut in your face.
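A minimal sketch of how compact a Keras model can be, assuming TensorFlow 2.x with its bundled Keras. The random data and layer sizes are placeholders, just enough to show the define, compile, fit rhythm.

```python
import numpy as np
from tensorflow import keras

keras.utils.set_random_seed(0)

# Placeholder data: 100 samples, 4 features, 3 classes
rng = np.random.default_rng(0)
X = rng.random((100, 4)).astype("float32")
y = rng.integers(0, 3, size=100)

# Define: a stack of layers, no manual weight bookkeeping
model = keras.Sequential([
    keras.Input(shape=(4,)),
    keras.layers.Dense(16, activation="relu"),
    keras.layers.Dense(3, activation="softmax"),
])

# Compile: choose optimizer, loss, and metrics by name
model.compile(optimizer="adam",
              loss="sparse_categorical_crossentropy",
              metrics=["accuracy"])

# Fit: Keras runs the training loop for you
history = model.fit(X, y, epochs=3, batch_size=16, verbose=0)
preds = model.predict(X[:5], verbose=0)  # class probabilities per sample
```

Trying a different architecture usually means editing only the list passed to `Sequential`.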

If you prefer to see what your model is doing while it trains, PyTorch is the one. It builds its computation graph dynamically as your code runs, so you can change the model’s behavior on the fly with ordinary Python control flow, print intermediate tensors, or step through a forward pass in a debugger. That’s a game-changer when you’re in the middle of troubleshooting a neural net or just testing a hunch. PyTorch is widely used in academic research, but it’s more than capable in production, too. The code feels close to standard Python, which helps keep things readable.
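The sketch below, assuming a recent PyTorch install, shows what "dynamic" means in practice. The hypothetical `DynamicNet` runs a plain Python loop whose length is chosen at call time, and autograd still tracks every step.

```python
import torch
import torch.nn as nn


class DynamicNet(nn.Module):
    """Hypothetical model: reuses one layer a variable number of times."""

    def __init__(self):
        super().__init__()
        self.layer = nn.Linear(8, 8)

    def forward(self, x, n_steps):
        # Ordinary Python loop; the graph is rebuilt on every call,
        # so n_steps can differ from one batch to the next
        for _ in range(n_steps):
            x = torch.relu(self.layer(x))
            # you could print(x) or set a breakpoint here to inspect values
        return x


torch.manual_seed(0)
net = DynamicNet()
x = torch.randn(2, 8)
out = net(x, n_steps=3)
out.sum().backward()  # gradients flow back through all three iterations
print(out.shape, net.layer.weight.grad.shape)
```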

RapidMiner takes a no-code or low-code approach to machine learning. You drag and drop operators into a visual process, and the tool takes care of the rest. It's great for businesses that want insights from data without getting tangled up in code. Even if you're a developer, its visual workflows can be a fast way to prototype before writing a single line of code. It also has built-in tools for preprocessing, validation, and deployment.

Apache Spark's MLlib is built for massive datasets. It's designed to run in distributed environments, spreading work across a cluster, so you're not stuck waiting around when you've got a billion rows to process. It supports a range of algorithms, including classification, regression, clustering, and collaborative filtering, and can plug into many different data sources. If scalability is your concern and you're dealing with terabytes of data, this one has the muscle for it.

H2O.ai is built for speed and automation. It connects with Python and R and even has its own web interface, H2O Flow, for less technical users. What makes it stand out is its AutoML feature, which trains and tunes a range of models for you and ranks them on a leaderboard so you can see which ones perform best. For anyone who needs quick, high-performing models without the manual labor of testing every variation, H2O.ai can be a real time-saver.

If setting up a local environment sounds like a hassle, Google Colab lets you run Jupyter notebooks straight from the browser. Its free tier includes GPU and TPU access (subject to usage limits), which is great for deep learning projects. Many common libraries come preinstalled, and you can pull in datasets from Google Drive or GitHub. It works well for collaboration, too, since notebooks can be shared much like Google Docs, which helps when working with teams or sharing results.

MLflow focuses on the messy middle of machine learning: experiment tracking, model versioning, and deployment. It helps keep your workflow organized and reproducible, which becomes more important as your projects scale. You can log parameters and metrics, compare runs side by side, and register models for production, so you're not digging through old folders trying to guess which file was the final version.

Now that we've looked at the tools, let's get practical. In this next section, we'll focus on one tool that stands out for deep learning development and hands-on flexibility: PyTorch.

Start by installing the PyTorch build that matches your system and hardware (CPU only, or a specific CUDA version for NVIDIA GPUs). The official PyTorch website has a selector that generates the exact install command for you. Once it’s installed, the first thing you’ll do is define your dataset, either by loading one from a library such as torchvision.datasets or by creating a custom class that inherits from torch.utils.data.Dataset. In a custom class, __len__ returns the number of samples and __getitem__ returns a single sample by index; a torch.utils.data.DataLoader then uses those two methods to batch and shuffle your data during training.
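Here’s a minimal custom dataset along those lines. `ToyDataset` is hypothetical: it generates random features with a simple rule-based label so the example runs without downloads. In practice you’d read files in `__init__` or `__getitem__`, or use a ready-made dataset such as `torchvision.datasets.MNIST`.

```python
import torch
from torch.utils.data import DataLoader, Dataset


class ToyDataset(Dataset):
    """Hypothetical dataset: random features, label = 1 if the features sum > 0."""

    def __init__(self, n_samples=256, n_features=10, seed=0):
        g = torch.Generator().manual_seed(seed)
        self.features = torch.randn(n_samples, n_features, generator=g)
        self.labels = (self.features.sum(dim=1) > 0).long()

    def __len__(self):
        # How many samples the DataLoader can draw from
        return len(self.labels)

    def __getitem__(self, idx):
        # One (input, target) pair; the DataLoader stacks these into batches
        return self.features[idx], self.labels[idx]


dataset = ToyDataset()
loader = DataLoader(dataset, batch_size=32, shuffle=True)
xb, yb = next(iter(loader))
print(len(dataset), xb.shape, yb.shape)
```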

Next, you’ll define a neural network by creating a class that inherits from torch.nn.Module. You set up your layers in the __init__ method and define how data flows through them in the forward method. Then comes the training loop. Pick a loss function (like nn.CrossEntropyLoss for classification, which expects raw logits) and an optimizer (like torch.optim.Adam). Move the model to your device (CPU or GPU) once; then, for each batch, move the inputs and targets to the same device, zero the gradients with optimizer.zero_grad(), run a forward pass, calculate the loss, call loss.backward() to compute gradients, and call optimizer.step() to update the weights.
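The steps above can be sketched as follows, assuming PyTorch and a synthetic binary-classification problem. The `MLP` class, the labeling rule, and the hyperparameters are all illustrative choices, not tuned values.

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")


class MLP(nn.Module):
    """Hypothetical two-layer network for a 10-feature, 2-class problem."""

    def __init__(self, in_features=10, hidden=32, n_classes=2):
        super().__init__()
        self.net = nn.Sequential(
            nn.Linear(in_features, hidden),
            nn.ReLU(),
            nn.Linear(hidden, n_classes),
        )

    def forward(self, x):
        # Return raw logits; CrossEntropyLoss applies log-softmax internally
        return self.net(x)


torch.manual_seed(0)
X = torch.randn(512, 10)
y = (X.sum(dim=1) > 0).long()  # synthetic labels
loader = DataLoader(TensorDataset(X, y), batch_size=64, shuffle=True)

model = MLP().to(device)  # move parameters to the device once
criterion = nn.CrossEntropyLoss()
optimizer = torch.optim.Adam(model.parameters(), lr=1e-2)

epoch_losses = []
for epoch in range(5):
    model.train()
    running = 0.0
    for inputs, targets in loader:
        inputs, targets = inputs.to(device), targets.to(device)
        optimizer.zero_grad()               # clear gradients from the last step
        loss = criterion(model(inputs), targets)  # forward pass + loss
        loss.backward()                     # compute gradients
        optimizer.step()                    # update weights
        running += loss.item() * inputs.size(0)
    epoch_losses.append(running / len(loader.dataset))
    print(f"epoch {epoch}: loss {epoch_losses[-1]:.4f}")
```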

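Along the way, you’ll track metrics such as training loss, validate on a held-out dataset (switching the model to eval mode and turning off gradient tracking while you do), and adjust your learning rate or batch size as needed. PyTorch keeps the training loop in your own code rather than hiding it behind a single fit call, which is a huge plus if you want to build things exactly the way you like or try unconventional ideas without running into limitations.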
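One way to add validation and learning-rate adjustment, sketched under the same synthetic-data assumption: hold out part of the data with `random_split`, evaluate under `model.eval()` and `torch.no_grad()`, and let `ReduceLROnPlateau` lower the learning rate when validation loss stalls. The split sizes, `patience`, and `factor` are arbitrary choices for illustration.

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset, random_split

torch.manual_seed(0)
X = torch.randn(600, 10)
y = (X.sum(dim=1) > 0).long()  # synthetic labels
train_set, val_set = random_split(TensorDataset(X, y), [500, 100])
train_loader = DataLoader(train_set, batch_size=64, shuffle=True)
val_loader = DataLoader(val_set, batch_size=100)

model = nn.Sequential(nn.Linear(10, 32), nn.ReLU(), nn.Linear(32, 2))
criterion = nn.CrossEntropyLoss()
optimizer = torch.optim.Adam(model.parameters(), lr=1e-2)
# Halve the learning rate if validation loss hasn't improved for 2 epochs
scheduler = torch.optim.lr_scheduler.ReduceLROnPlateau(
    optimizer, mode="min", factor=0.5, patience=2
)


def evaluate(model, loader, criterion):
    """Return (mean loss, accuracy) without tracking gradients."""
    model.eval()  # e.g. disables dropout; harmless for this model
    total_loss, correct, count = 0.0, 0, 0
    with torch.no_grad():
        for inputs, targets in loader:
            logits = model(inputs)
            total_loss += criterion(logits, targets).item() * inputs.size(0)
            correct += (logits.argmax(dim=1) == targets).sum().item()
            count += inputs.size(0)
    return total_loss / count, correct / count


history = []
for epoch in range(10):
    model.train()
    for inputs, targets in train_loader:
        optimizer.zero_grad()
        loss = criterion(model(inputs), targets)
        loss.backward()
        optimizer.step()
    val_loss, val_acc = evaluate(model, val_loader, criterion)
    scheduler.step(val_loss)  # the scheduler watches the validation loss
    lr = optimizer.param_groups[0]["lr"]
    history.append({"epoch": epoch, "val_loss": val_loss, "val_acc": val_acc, "lr": lr})
    print(f"epoch {epoch}: val_loss {val_loss:.4f}  val_acc {val_acc:.3f}  lr {lr:g}")
```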

When working on an ML project, the right tool can make or break how smoothly everything goes. Each tool mentioned here serves a purpose — some are good for modeling, some for deployment, and some for experiment tracking. It’s about finding the ones that match your needs and workflow. PyTorch stands out because of its flexibility and clarity, especially when you're deep into building models and need to see what's going on at every step. The real strength of a machine learning project often lies not just in the model but in how well you understand and manage the process from start to finish — and that's where these tools help.

Advertisement

Looking for the best places to buy or sell AI prompts? Discover the top AI prompt marketplaces of 2025 and find the right platform to enhance your AI projects

Need clarity in your thoughts? Learn how ChatGPT helps create mind maps and flowcharts, organizing your ideas quickly and effectively without fancy software

Curious how machines are learning to create original art? See how DCGAN helps generate realistic sketches and brings new ideas to creative fields

Want sharper, quicker AI-generated images? Adobe’s Firefly Image 3 brings improved realism, smarter editing, and more natural prompts. Discover how this AI model enhances creative workflows

Want to measure how similar two sets of data are? Learn different ways to calculate cosine similarity in Python using scikit-learn, scipy, and manual methods

Looking to boost your chances on LinkedIn? Here are 10 ways ChatGPT can support your job search, from profile tweaks to personalized message writing

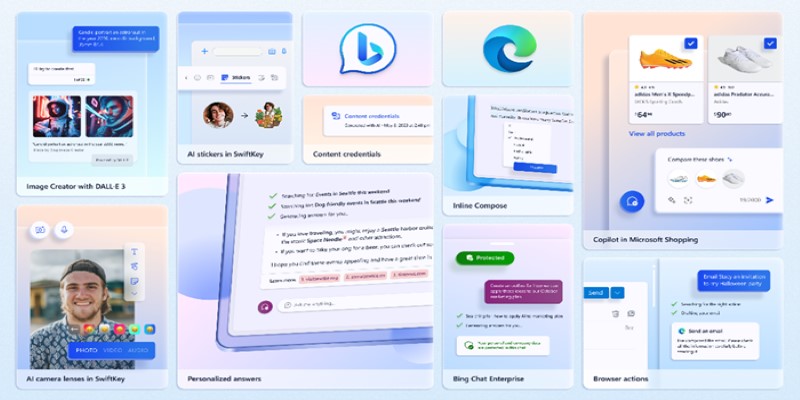

Want to turn your words into images without paying a cent? Learn how to use DALL·E 3 for free inside Microsoft Bing, with zero design skills or signups required

Veed.io makes video editing easy and fast with AI-powered tools. From auto-generated subtitles to customizable templates, create professional videos without hassle

Looking for a smart alternative to Devin AI that actually fits your workflow? Here are 8 options that help you code faster without overcomplicating the process

Looking for the best way to start learning data science without getting lost? Here are 9 beginner-friendly coding platforms that make it easy to begin and stay on track

Looking for smarter ways to get answers or spark ideas? Discover 10 standout ChatGPT alternatives that offer unique features, faster responses, or a different style of interaction

Explore hyper-personalization marketing strategies, consumer data-driven marketing, and customized customer journey optimization