
It’s hard not to be curious when you hear about something that handles text, images, and even visual input in one go. GPT-4o does just that. It’s designed to read, write, see, and respond—all in the same breath. If you’ve used AI before, chances are it’s been limited to text. But GPT-4o steps out of that boundary and brings vision and image handling into the mix without needing multiple tools or plugins. That’s the upgrade we didn’t know we needed until now.

This model isn’t just a smarter chatbot. It works across formats, meaning it can do things like read a screenshot, answer a question based on a diagram, or generate content from a rough image sketch. That blend of visual and textual capability makes it useful in ways most AI models haven’t managed. Let’s break it down into the core ways you can use GPT-4o, especially through the API.

This is where most people start. Text is still the most common input for AI use, whether you’re building a chatbot, summarizing documents, or automating customer support.

What it handles well:

The key difference with GPT-4o is how well it understands nuance. You can feed it a paragraph of messy instructions, and it won't just spit out a reply; it reads between the lines. In customer-facing tools, that saves a lot of manual filtering and correction.

Here’s where things get more interesting. GPT-4o doesn’t need a separate tool to handle vision. Upload an image, and it can recognize objects, read text from it, and describe what’s going on.

Examples of what it can do:

What sets this apart from traditional OCR tools is its contextual reading. It doesn't just extract the words—it understands how those words relate to each other. A note stuck to a fridge that says "Don't forget the eggs" won't be read as isolated text; it understands it's a reminder. This makes GPT-4o helpful in fields like documentation, translation, or even customer support, where users submit screenshots instead of typing issues.

While GPT-4o isn't the first tool that can generate images, it's the ease of doing so from plain text that matters. You can describe what you want, and the API will return an image to match the prompt. No layers of instructions or multiple tools are needed.

Common uses:

What's more, the model allows image editing too. Upload an image and ask for changes—it could be a color change, object removal, or stylistic tweak. It works like a visual assistant that understands what you want to be changed without needing pixel-perfect instructions.

This is where GPT-4o feels like it’s reading your mind. Feed it both an image and a prompt, and it connects the two without skipping a beat. You can show it a complex diagram and ask questions about it. Or give it a product photo and ask for a description, headline, or SEO tags.

How it helps:

One major win here is how it deals with ambiguity. Instead of needing everything to be crystal-clear or formatted, the model works well with casual inputs. You don’t need to prep every image or label every detail. Just pair the image and question, and it processes the link on its own.

Getting started with the GPT-4o API doesn’t need to be complicated. Here’s a simple breakdown:

Sign in to your OpenAI account and head over to the API dashboard. Once there, generate an API key. This is your access token for making requests.

Select gpt-4o as your model from the list. Make sure your project environment supports it. If you're using the Python SDK, your model call might look something like this:

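```python
from openai import OpenAI

# The client reads the API key from the OPENAI_API_KEY environment variable.
client = OpenAI()

# openai.ChatCompletion.create was removed in version 1.0 of the SDK;
# current versions expose the same call on a client object.
response = client.chat.completions.create(
    model="gpt-4o",
    messages=[{"role": "user", "content": "Explain relativity in simple words"}],
)
print(response.choices[0].message.content)
```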

Depending on the use case, your input could be plain text, an image, or both. For text-only prompts, it’s business as usual. For visual tasks, attach the image as an `image_url` content part, either as a link to a hosted image or as a base64-encoded data URL.

Example with image and text:

```json
{
  "model": "gpt-4o",
  "messages": [
    {
      "role": "user",
      "content": [
        {"type": "text", "text": "What is written on this label?"},
        {"type": "image_url", "image_url": {"url": "your_image_url"}}
      ]
    }
  ]
}
```
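When the image sits on disk rather than at a public URL, one option is to embed it as a base64 data URL in the same `image_url` field. The sketch below only builds the user message and makes no API call. `build_image_message` and its parameters are hypothetical names chosen for illustration, not part of the OpenAI SDK, and the placeholder bytes stand in for a real image file.

```python
import base64


def build_image_message(prompt: str, image_bytes: bytes, mime_type: str = "image/png") -> dict:
    """Return a user message pairing a text prompt with an inline image.

    Hypothetical helper: the name and signature are illustrative only.
    """
    # Encode the raw bytes as base64 text and wrap them in a data URL.
    encoded = base64.b64encode(image_bytes).decode("ascii")
    data_url = f"data:{mime_type};base64,{encoded}"
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": prompt},
            {"type": "image_url", "image_url": {"url": data_url}},
        ],
    }


# Placeholder bytes; in practice these would come from open(path, "rb").read().
message = build_image_message("What is written on this label?", b"\x89PNG placeholder")
print(message["content"][1]["image_url"]["url"][:22])
```

The returned dict can be dropped into the `messages` list of a request in place of a message that points at a hosted image.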

The output comes as structured JSON. For text, you’ll get a clean string. For images, it’ll be a URL or file output, depending on how you requested it. Use that in your app, site, or report.

Keep track of usage. GPT-4o is designed to be lighter and faster than some previous versions, but API costs still apply. Use logging or a dashboard to monitor your calls and make adjustments if needed.
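As a rough sketch of handling the output and tracking usage, the helper below pulls the reply text and token counts out of a chat completion response that has already been converted to a plain dict. The `summarize_response` name and the sample payload are made up for illustration. The field names (`choices`, `message`, `content`, `usage`) follow the Chat Completions response format, but it is worth checking them against the response you actually receive.

```python
def summarize_response(response: dict) -> dict:
    """Extract the reply text and token usage from a chat completion dict.

    Hypothetical helper for illustration; not part of the OpenAI SDK.
    """
    reply = response["choices"][0]["message"]["content"]
    # Fall back to zero counts if the usage block is missing.
    usage = response.get("usage", {})
    return {
        "reply": reply,
        "prompt_tokens": usage.get("prompt_tokens", 0),
        "completion_tokens": usage.get("completion_tokens", 0),
        "total_tokens": usage.get("total_tokens", 0),
    }


# Made-up sample payload shaped like a chat completion response.
sample = {
    "choices": [{"message": {"role": "assistant", "content": "Best before 12/2025"}}],
    "usage": {"prompt_tokens": 120, "completion_tokens": 8, "total_tokens": 128},
}

summary = summarize_response(sample)
print(summary["reply"], summary["total_tokens"])
```

Writing each summary to a log gives a simple running record of how many tokens every call consumes.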

GPT-4o isn’t just another iteration of a language model. It’s the first real attempt at making AI respond to the world the way humans do—visually, verbally, and contextually. Whether you’re dealing with text, images, or both, the fact that one model can handle it all makes your workflow easier and quicker.

And if you're building something that needs clarity, speed, and multi-format understanding, using GPT-4o through its API might be one of the more straightforward upgrades you can try this year.

Advertisement

Want a free coding assistant in VS Code? Learn how to set up Llama 3 using Ollama and the Continue extension to get AI help without subscriptions or cloud tools

What if one AI model could read text, understand images, and connect them instantly? See how GPT-4o handles it all with ease through a single API

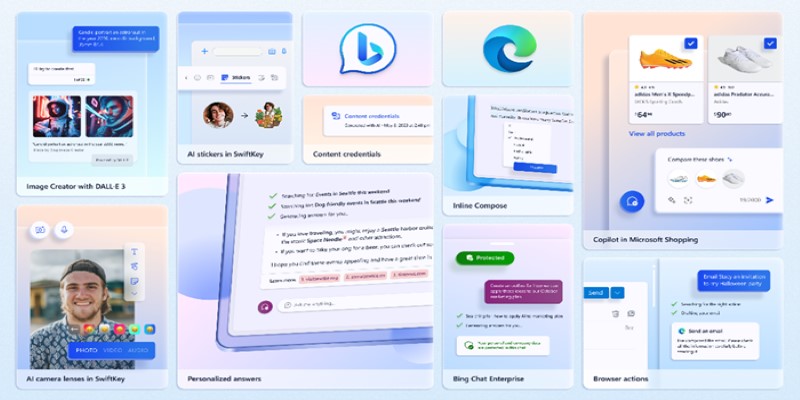

Want to turn your words into images without paying a cent? Learn how to use DALL·E 3 for free inside Microsoft Bing, with zero design skills or signups required

Want sharper, quicker AI-generated images? Adobe’s Firefly Image 3 brings improved realism, smarter editing, and more natural prompts. Discover how this AI model enhances creative workflows

What predictive AI is and how it works with real-life examples. This beginner-friendly guide explains how AI can make smart guesses using data

Need clarity in your thoughts? Learn how ChatGPT helps create mind maps and flowcharts, organizing your ideas quickly and effectively without fancy software

Need help setting up Microsoft Copilot on your Mac? This step-by-step guide walks you through installation and basic usage so you can start working with AI on macOS today.

Want to measure how similar two sets of data are? Learn different ways to calculate cosine similarity in Python using scikit-learn, scipy, and manual methods

Looking for smarter ways to code in 2025? These AI coding assistants can help you write cleaner, faster, and more accurate code without slowing you down

Discover how AI and DevOps team up to boost remote work with faster delivery, smart automation, and improved collaboration

Curious about where AI is headed after ChatGPT? Take a look at what's coming next in the world of generative AI and how chatbots might evolve in the near future

Looking for the best places to buy or sell AI prompts? Discover the top AI prompt marketplaces of 2025 and find the right platform to enhance your AI projects