
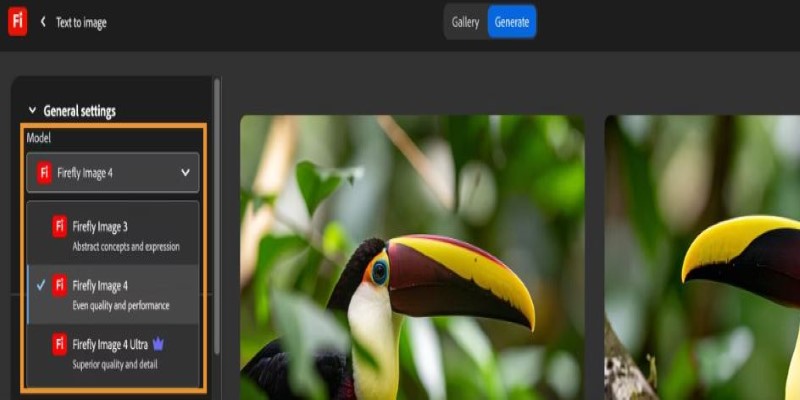

If you've used AI tools for images, whether to play around with ideas, design something from scratch, or just experiment with what these models can do, then Adobe's latest release might catch your attention. Firefly Image 3 has officially landed, and it shows how far image generation has come and where it might be heading next. It's sharper, quicker, and noticeably better at grasping the context you feed it. But let's break down what's actually new.

The jump from Firefly Image 2 to Firefly Image 3 isn’t just about better pictures. It’s also about how the model understands the prompt you give it. You no longer have to over-explain or feed it five variations just to get one decent output. Firefly Image 3 handles nuance better. Ask for a cozy living room with soft lighting and wooden textures, and the result will likely come back close to what you had in mind. There’s more awareness of depth, proportion, and mood.

The earlier models often felt like they were guessing, and sometimes doing a decent job of it. This one feels like it's following along with you. Textures look cleaner. Shadows fall where they should. Faces, which used to be hit or miss, are now more consistent and expressive. You'll notice it especially when you work with complex environments or subjects in motion. There's less of the awkward stiffness that used to give away the fact that an image came from a machine.

One of the biggest draws of Firefly Image 3 is how much more responsive it is when you edit images with text prompts. Adobe has built in new tools that don't just generate from scratch; they can also take what you’ve got and shift it in ways that feel natural. You can tweak a character’s pose or change the lighting in a scene without wrecking the whole composition.

Text-to-image generation still gets the spotlight, but the updates in inpainting and editing can’t be ignored. You can now describe an object or effect you want to add or remove, and the AI doesn’t just throw something random into the frame. It actually places it in a way that fits. Want a mug of coffee added to a desk scene? Firefly 3 knows it shouldn’t float mid-air or sit halfway off the edge. The spatial awareness is stronger, and the color balance doesn’t look patched in.

That means quicker edits and fewer do-overs, which is especially helpful when you're on a deadline or just don't feel like wrangling layers and masks in Photoshop for an hour. It's clear that Adobe is leaning toward the idea of AI as a support tool rather than a full takeover, which makes sense for its user base.

One thing that stands out about this version is how it handles style. You don't have to pick between something hyper-realistic or cartoonish anymore. Firefly Image 3 gives you more flexibility in tone and texture. Want a hand-drawn feel with accurate lighting? It can do that. Prefer something that looks like a polished 3D render? That's on the table, too. The range is wider, but what really matters is that the results don't feel clunky when you try to blend styles.

The model also seems better trained on different lighting types. Scenes set at golden hour actually look like golden hour now, not just a vague orange filter. And if you ask for rain on glass, you won't just get a few smudges and hope for the best: the water droplets show up in detail, and the reflections behave like they would in real life.

There's also more detail in objects that used to get glossed over. Think fabric folds, tree bark, and paper textures—Firefly Image 3 doesn't blur them out as easily. If anything, it's easier to go too far with detail now, which means you'll want to be more specific about tone when crafting your prompts. Otherwise, you might get something that's technically perfect but doesn't match the mood you wanted.

Let's be honest: with some AI models, writing prompts feels like talking to a search engine that might not understand you. That's less of a problem now. You can describe a scene as you would to another person, such as "a narrow cobblestone alley in the early morning with fog" or "a dog leaping into a pond on a sunny day," and Firefly 3 interprets it with more context than before.

It’s better at filling in the blanks without going rogue. You don’t need to micromanage every adjective or keyword. And when it doesn’t nail it the first time, the variations you get afterward actually feel like versions of your original idea, not something completely different.

Adobe has clearly worked on refining how prompts translate into images. The model picks up on spatial relationships and action words more clearly, which means you can describe movement or atmosphere and get something that reflects it. For people who aren’t prompt-engineering experts—and let’s be real, most people aren’t—that makes a huge difference.

Firefly Image 3 doesn’t reinvent how AI image generation works, but it’s a solid leap forward in how smoothly it fits into creative workflows. It listens better, thinks more clearly, and messes up less often. That’s a win if you’re someone who spends time tweaking AI images to get them just right. The more it understands from the start, the less time you waste fixing what went wrong. It’s easy to see Firefly 3 becoming the go-to choice for designers and illustrators who want control without needing to constantly fight the tool they’re using.
