ChatGPT sparked real excitement when it showed how natural AI could feel—drafting emails, solving problems, even writing in your favorite tone. But now that the hype has cooled, the bigger question is what comes next.

Generative AI is only getting sharper, and chatbots are learning to respond more like people. So, are we heading toward digital tools that truly understand us—or just smarter text generators? Let’s take a quick look at where things are going.

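Right now, most generative AI models, ChatGPT included, rely on text prediction. They learn statistical patterns from huge amounts of training data and build each response one piece at a time, repeatedly predicting a likely next word (technically, the next token, which may be a whole word or just part of one). This is why they sound fluent and helpful but occasionally produce answers that don't make sense or are simply wrong, delivered with the same confidence as correct ones.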
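
To make "predicting the next word" concrete, here is a deliberately tiny sketch. It is not how ChatGPT works internally: real models use large neural networks over tokens and weigh far more context than a single previous word. This toy version, a bigram model, simply counts which word follows which in a small sample text and always picks the most frequent follower. The function names and the sample text are made up for illustration.

```python
from __future__ import annotations

from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count, for every word, which words follow it in the sample text."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows: dict[str, Counter], word: str) -> str | None:
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    # most_common breaks ties by first occurrence in the sample text.
    return counts.most_common(1)[0][0]


def generate(follows: dict[str, Counter], start: str, length: int = 5) -> list[str]:
    """Greedily extend `start` one predicted word at a time."""
    out = [start.lower()]
    for _ in range(length):
        nxt = predict_next(follows, out[-1])
        if nxt is None:
            break
        out.append(nxt)
    return out


corpus = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."
model = train_bigrams(corpus)
print(predict_next(model, "the"))   # cat
print(generate(model, "the", 4))    # ['the', 'cat', 'sat', 'on', 'the']
```

With this sample text, `predict_next(model, "the")` returns `"cat"` because "cat" follows "the" twice while every other word follows it only once. The output reads smoothly but means nothing, which is a small-scale version of why fluent text and correct text are not the same thing.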

But the future is pushing toward something deeper. Developers are training AI to understand context not just from the words you type but from your behavior, history, tone, and even visual cues. Instead of just generating a good-sounding sentence, future bots may interpret emotional cues, detect urgency, or pick up on your habits over time.

Think about a chatbot that doesn’t just answer your question but notices when you're having a bad day and adjusts how it replies. Or one that remembers how you like things explained: simple and to the point, or with extra detail. These ideas aren't far-fetched anymore.

Some early-stage systems already combine text with voice, video, and even facial expression recognition. This could be a game changer for support services, learning tools, and personal assistants.

At one point, there was a clear difference between a chatbot and something like a personal assistant. A chatbot helped you reset your password or answered FAQs. An assistant scheduled your meetings, checked your calendar, and knew your preferences.

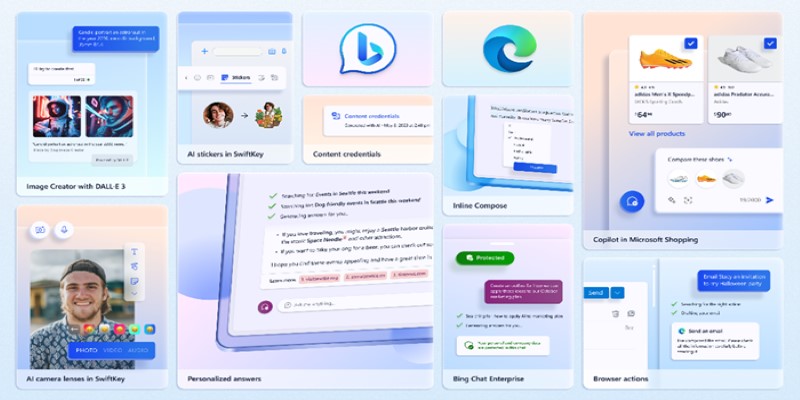

Now, those lines are fading fast. The next generation of generative AI aims to blend knowledge, memory, and functionality. Imagine a chatbot that not only answers your question about a new phone but also remembers your budget, compares the options with phones you've chosen before, checks your calendar for delivery windows, and even completes the order, all in the same thread.

Instead of opening ten different apps, you could have one tool that talks to your bank, knows your fitness goals, helps plan your meals, and coordinates your travel plans. All while keeping the conversation smooth and natural. Not robotic. Not generic. Just how you like it.

Of course, this raises questions about privacy and control. Developers are already working on ways to keep these tools both helpful and secure: local processing, opt-in memory, and transparent data handling are showing up in a growing number of new systems.

Right now, most people interact with AI through words. But humans aren’t wired to rely on words alone. We read expressions, notice pauses, look at images, and move our hands when we talk. That’s where multimodal AI comes in.

Instead of only focusing on text, new models are learning to understand and generate across different formats—images, video, audio, and even code. Some tools can already generate diagrams from simple instructions or turn a rough sketch into a clean design. Others can take a voice memo, pick out the key points, and summarize them into neat meeting notes.

For users, this means the experience will become much more natural. Want to explain something tricky? Upload a photo or record a quick voice note. Want a summary? Ask for it in chart form. Whether you’re working on a design, researching a topic, or just trying to figure something out, future AI won’t need you to explain it in just one way. It will meet you where you are.

This shift to multimodal interaction may also help solve one of the biggest challenges of today’s AI tools: how to make them useful to people who don’t want to sit and type long prompts or read walls of text.

One thing that still feels clunky with current AI is how it handles long or complex conversations. Sometimes, the chatbot loses track of what was said earlier. Or it gives a great answer but can’t follow through on the next step.

This is an area where major improvements are expected. Developers are working on tools that keep memory across sessions so the AI can build a longer-term understanding of the user. It could remember your preferences, past interactions, and even unfinished tasks. Not in a creepy, always-watching kind of way—but in a helpful, don't-make-me-explain-it-again kind of way.

Goal-oriented dialogue systems are also on the radar. These are designed not just to chat but to complete a task with you. Say you're planning a trip. Instead of just listing the available flights, a smarter AI could help you compare prices, check baggage options, suggest nearby hotels, account for your time zone preferences, and book everything in one go, all through a conversation.
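
A common way to picture a goal-oriented assistant is as something that tracks the pieces of information a task needs (often called slots) and keeps asking until every slot is filled. The sketch below is a minimal, hypothetical illustration of that idea for trip booking. The slot names and the `TripState` and `next_action` helpers are invented for this example and don't come from any particular product, and a real system would use a language model to pull slot values out of free-form messages rather than receive them as keyword arguments.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical slots a trip-booking assistant needs before it can act.
REQUIRED_SLOTS = ("origin", "destination", "date")


@dataclass
class TripState:
    """Remembers what the user has told the assistant so far in this conversation."""
    slots: dict[str, str] = field(default_factory=dict)

    def update(self, **values: str) -> None:
        # Keep only known, non-empty slot values; later values overwrite earlier ones.
        for name, value in values.items():
            if name in REQUIRED_SLOTS and value:
                self.slots[name] = value

    def missing(self) -> list[str]:
        return [name for name in REQUIRED_SLOTS if name not in self.slots]


def next_action(state: TripState) -> str:
    """Ask for the first missing slot, or 'book' once everything is known."""
    missing = state.missing()
    if missing:
        return f"ask:{missing[0]}"
    return "book:" + ",".join(state.slots[name] for name in REQUIRED_SLOTS)


state = TripState()
state.update(destination="Lisbon")
print(next_action(state))  # ask:origin
state.update(origin="Berlin", date="2025-06-01")
print(next_action(state))  # book:Berlin,Lisbon,2025-06-01
```

Keeping the state in an object that lives across turns is what lets the assistant avoid asking for the same detail twice. Actually booking anything would require calls to real flight and hotel services, which this sketch leaves out.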

It's about making chatbots not just responsive but truly useful from start to finish.

While ChatGPT gave us a glimpse of what’s possible, it’s clear that it’s just a stepping stone. The real excitement is in what comes next. We’re heading into a time where chatbots don’t just respond—they understand. They learn from you, work with you, and adapt to your world.

Whether it's the way they process visuals, the way they speak, or how well they remember what you care about—AI tools are going beyond generic answers and moving toward real support. Not just smart but helpful. And not just helpful but human-like.

So next time you ask your chatbot a question, think about this—it might soon be the smartest conversation you’ll have all day.
